Evaluating density forecasts: forecast combinations, model mixtures, calibration and sharpness

External Authors

Wallis, K.F.

Related Themes

Macro-Economic Modelling and Forecasting

JEL Code

C22, C53

Paper Category Number

320

In a recent article, Gneiting, Balabdaoui and Raftery (JRSSB, 2007) propose the criterion of sharpness for the evaluation of predictive distributions or density forecasts. They motivate their proposal with an example in which standard evaluation procedures based on probability integral transforms cannot distinguish between the ideal forecast and several competing forecasts. In this paper we show that their example has some unrealistic features from the perspective of the time-series forecasting literature; hence it is an insecure foundation for their argument that existing calibration procedures are inadequate in practice. We present an alternative, more realistic example in which relevant statistical methods, including information-based methods, provide the required discrimination between competing forecasts. We conclude that there is no need for a subsidiary criterion of sharpness.
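The abstract turns on a general point: uniform probability integral transforms (PITs) need not separate an ideal forecast from a competitor, whereas an information-based criterion such as the average logarithmic score can. The Python sketch below illustrates that point under an assumed stylised setup in which each period μ_t ~ N(0, 1) is drawn independently and the outcome is y_t ~ N(μ_t, 1). It compares a forecaster who knows μ_t with one who always issues the unconditional N(0, 2) density. This is not the paper's alternative example, and the function names `simulate` and `evaluate` are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean, pvariance


def simulate(n, seed=0):
    """Draw n periods of the assumed setup: mu_t ~ N(0, 1), y_t | mu_t ~ N(mu_t, 1)."""
    rng = random.Random(seed)
    mus = [rng.gauss(0.0, 1.0) for _ in range(n)]
    ys = [rng.gauss(mu, 1.0) for mu in mus]
    return mus, ys


def evaluate(n=100_000, seed=0):
    """Return PIT summary statistics and the average log score for two forecasters."""
    mus, ys = simulate(n, seed)
    # Climatological forecast: the unconditional distribution of y_t, N(0, 2).
    climatological = NormalDist(0.0, math.sqrt(2.0))
    forecasters = {
        "ideal": [NormalDist(mu, 1.0) for mu in mus],  # knows mu_t each period
        "climatological": [climatological] * n,
    }
    results = {}
    for name, dists in forecasters.items():
        # PIT: the forecast CDF evaluated at the realised outcome.
        pits = [d.cdf(y) for d, y in zip(dists, ys)]
        results[name] = {
            "pit_mean": fmean(pits),  # 0.5 if the PITs are uniform
            "pit_var": pvariance(pits),  # 1/12 if the PITs are uniform
            # Average log predictive density at the outcome (higher is better).
            "log_score": fmean(math.log(d.pdf(y)) for d, y in zip(dists, ys)),
        }
    return results


if __name__ == "__main__":
    for name, stats in evaluate().items():
        print(name, {k: round(v, 4) for k, v in stats.items()})
```

Both forecasters should show PIT means near 0.5 and PIT variances near 1/12, so a uniformity check alone cannot rank them. The ideal forecaster's average log score should, however, exceed the climatological one by roughly ½ ln 2 ≈ 0.35. Because the draws are independent over time by assumption, checks on serial dependence in the PITs would not separate the two forecasters in this sketch either.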

Related Blog Posts

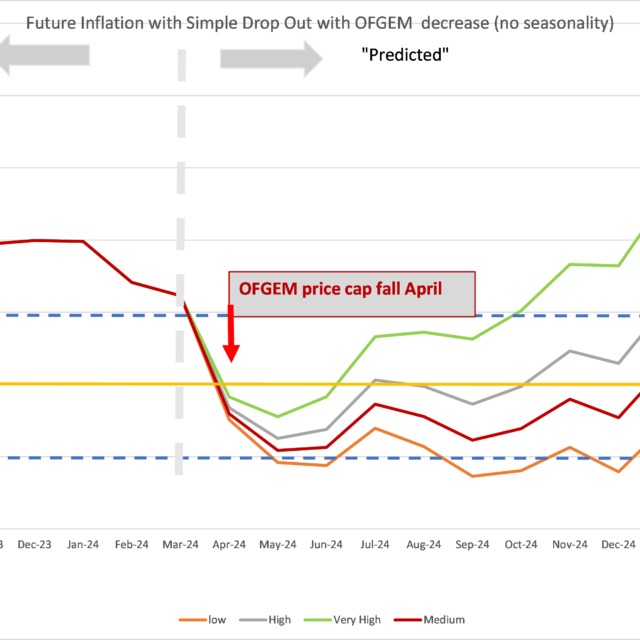

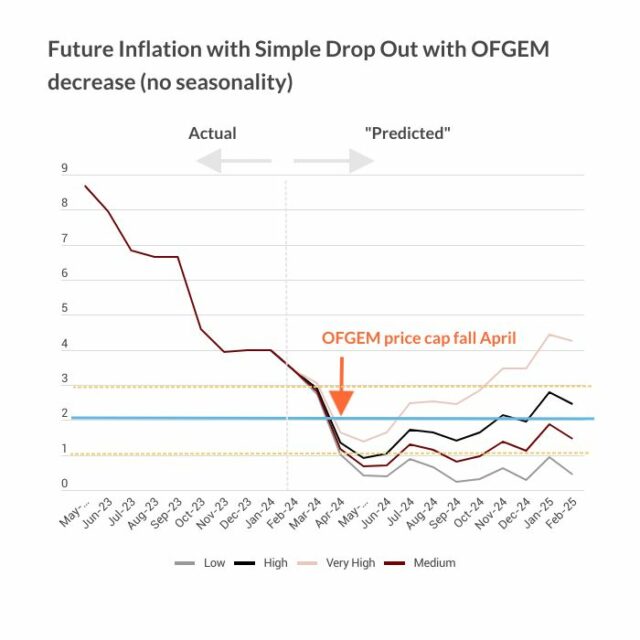

Inflation Still Likely to Fall to 2 per cent or Below Next Month

Huw Dixon

17 Apr 2024

8 min read

What is the Current State of the UK Economy?

Paula Bejarano Carbo

Stephen Millard

26 Feb 2024

7 min read

Related Projects

Related News

Why it’s not worth worrying that the UK has technically entered a recession

26 Feb 2024

4 min read

1.2 million UK Households Insolvent This Year as a Direct Result of Higher Mortgage Repayments

22 Jun 2023

2 min read

The Key Steps to Ensuring Normal Service is Quickly Resumed in the Economy

13 Feb 2023

4 min read

Related Publications

Recessionary Pressures Receding in the Rearview Mirror as UK Economy Gains Momentum

12 Apr 2024

GDP Trackers

Related events

Summer 2023 Economic Forum

Spring 2023 Economic Forum

Winter 2023 Economic Forum

Autumn 2022 Economic Forum

Summer 2022 Economic Forum

Spring 2022 Economic Forum

Winter 2022 Economic Forum

Autumn 2021 Economic Forum