Recent Developments In Economic Forecasting

Pub. Date

Pub. Type

Forecasts of the future values of economic variables continue to be regularly produced by professional forecasters, produced and used by policy-makers, and used, but also abused, by the media.

The National Institute's regular forecasts are prepared using its global econometric model which has, of course, developed over the years. In parallel with this development a considerable body of work has built up looking both at the uncertainty surrounding forecasts and at forecasting by means of statistical rather than structural models. The current issue of the National Institute Economic Review brings together five papers reflecting two main developments.

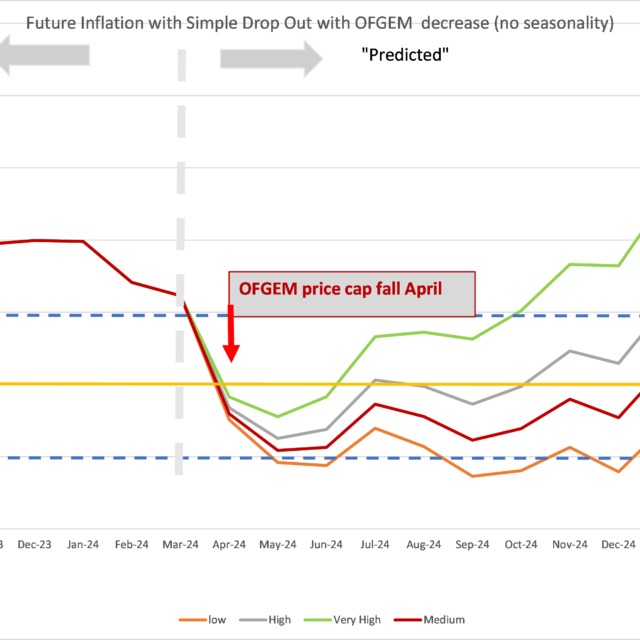

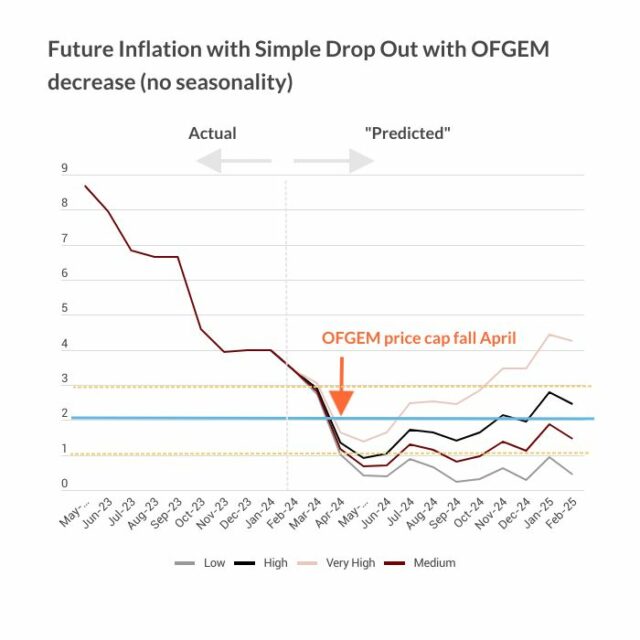

The first key development, which has affected both the production and consumption of economic forecasts, is the growing appreciation that it is not a question of this forecast proving to be 'right' and that forecast proving to be 'wrong'. Unfortunately this is not yet fully recognised, which explains some of the aforementioned abuse. Point forecasts, the traditional focus, are better seen as the central points of ranges of uncertainty. An inflation forecast of, say, 2% must mean that people should not be surprised if actual inflation turns out to be a little larger or smaller than that. Moreover, at a time of heightened economic uncertainty, they should perhaps not be very surprised if it turns out to be much larger or smaller. Consequently, to provide a complete description of the uncertainty associated with the point forecast, many professional forecasters, including the National Institute as a pioneer, now publish density forecasts or 'fan charts'. Importantly, just as point forecasts are commonly evaluated using the subsequent outturn, so the reliability of uncertainty forecasts can be evaluated.

The first paper, by Kevin Dowd, complements existing studies that have evaluated the Bank of England's fan chart for inflation ex post by evaluating the Bank's GDP fan charts. Having developed an evaluation test which aims to accommodate the expected dependence in the data used to evaluate the density forecasts, Dowd finds that the Bank's own assessment of the reliability of its forecasts is unreliable at short horizons, but is more reliable at longer horizons when evaluated against the latest, rather than first, GDP estimates.
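One common way to evaluate a density forecast against the outturn is the probability integral transform (PIT): the forecast cumulative distribution function evaluated at the realised value. If the density forecasts are reliable, the PITs should look like draws from a uniform distribution on [0, 1]. The sketch below is illustrative only. It assumes normal forecast densities and treats the PITs as independent, so it is not Dowd's test, which is designed to accommodate dependence. The function names and all numbers are invented for the example.

```python
from statistics import NormalDist


def pit_values(means, sds, outturns):
    """Evaluate each (assumed normal) forecast CDF at its outturn."""
    return [NormalDist(m, s).cdf(y) for m, s, y in zip(means, sds, outturns)]


def ks_distance_from_uniform(pits):
    """Largest gap between the empirical CDF of the PITs and the uniform CDF."""
    ordered = sorted(pits)
    n = len(ordered)
    return max(
        max((i + 1) / n - p, p - i / n)
        for i, p in enumerate(ordered)
    )


# Hypothetical forecast means, standard deviations and outturns (not real data).
means = [2.0, 2.1, 1.9, 2.2]
sds = [0.5, 0.5, 0.6, 0.6]
outturns = [2.0, 2.6, 1.3, 2.5]

pits = pit_values(means, sds, outturns)
print("PITs:", [round(p, 3) for p in pits])
print("Distance from uniform:", round(ks_distance_from_uniform(pits), 3))
```

A large distance suggests the stated uncertainty is too wide, too narrow or off-centre. With so few observations, and with overlapping forecast horizons inducing dependence, a formal test would need more care than this sketch takes.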

The second paper, by Gianna Boero, Jeremy Smith and Ken Wallis, introduces and analyses a new dataset, the Bank of England's Survey of External Forecasters. This survey, discussed in the Bank's Inflation Report, details up to forty-eight external forecasters' density forecasts for inflation and GDP growth each quarter for the last decade. Exploiting the fact that the forecasters are asked for successive forecasts of the same event, Boero et al. use this 'fixed-event' structure to test the efficiency of the point forecasts as well as the density forecast means which need not, as they explain, be the same as the point forecasts. The forecasts are deemed 'weakly efficient' when the forecast revisions are independent of each other. Interestingly, they also look at revisions to the conditional standard deviation forecasts extracted from the density forecasts. They find, consistent with the 'fan' shape of density forecasts, that uncertainty is reduced, and at an increasing rate, as the forecaster moves closer to the target.

The second key development, which the papers by Garratt et al., Assenmacher-Wesche and Pesaran, and Eklund and Kapetanios pick up in complementary ways, also concerns uncertainty, but uncertainty about both the appropriate forecasting model(s) and their parameters. Three things contribute to this uncertainty: data revisions, which for key macroeconomic aggregates often appear to be at least in part predictable; economists' ignorance, in the face of many putatively helpful indicator variables, about the 'right' forecasting model; and structural breaks. These three papers all provide means of dealing with model uncertainty, either through model averaging or by combining information from many different indicators by forecasting using large datasets. The models they use to forecast are less structural than the National Institute's model, although they need not be atheoretical since identifying restrictions can be imposed on the statistical models. But it is fair to say that the National Institute rely to a greater extent on economic theory when 'integrating out' model uncertainty and specifying the forecasting model used to produce their point forecasts. The National Institute then derive their density forecasts by centering them on this point forecast, assuming normality and setting the conditional variance equal to the variance of the historical forecast error.
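The recipe just described for the National Institute's density forecasts (centre on the point forecast, assume normality, take the variance from past forecast errors) can be sketched in a few lines. This is a minimal illustration rather than the Institute's actual procedure: the function names, the use of the sample variance and every number below are assumptions made for the example.

```python
from math import sqrt
from statistics import NormalDist, variance


def normal_density_forecast(point_forecast, past_errors):
    """Normal density centred on the point forecast.

    The variance is the sample variance of historical forecast errors
    (an assumed choice; other variance estimators are possible).
    """
    return NormalDist(mu=point_forecast, sigma=sqrt(variance(past_errors)))


def central_interval(dist, coverage):
    """Symmetric interval containing `coverage` of the probability mass."""
    tail = (1.0 - coverage) / 2.0
    return dist.inv_cdf(tail), dist.inv_cdf(1.0 - tail)


# Hypothetical past forecast errors (outturn minus forecast), in percentage points.
past_errors = [-0.6, 0.3, 0.8, -0.2, -0.5, 0.4, 0.1, -0.3]

density = normal_density_forecast(2.0, past_errors)
for coverage in (0.5, 0.8, 0.9):
    low, high = central_interval(density, coverage)
    print(f"{coverage:.0%} band: {low:.2f} to {high:.2f}")
```

Nested bands of this kind, computed for each forecast horizon, are what a fan chart displays. Because forecast errors tend to be larger at longer horizons, the bands widen as the horizon lengthens.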

The paper by Tony Garratt, Kevin Lee and Shaun Vahey considers the increased use of so-called 'real-time' datasets in macroeconomics. These datasets contain successive vintages of data and have become an important tool when testing both economic theory and economic forecasts. This is because data revisions mean the data available today can tell a different story to the data available yesterday. Garratt et al. describe a modelling framework to accommodate this data uncertainty and in two applications advocate the production of density forecasts to reflect the different layers of uncertainty inherent in any forecast.

Katrin Assenmacher-Wesche and Hashem Pesaran consider the ability of forecast combination to improve forecasts in the face of two kinds of uncertainty: uncertainty about how the forecasting model is specified and, given the instability of economic time-series, uncertainty over which window of data should be used to estimate the model(s). They find that forecast combination helps. Interestingly, averaging forecasts from different estimation windows is found to be at least as effective as averaging over forecasts from different model specifications, which suggests that it helps mitigate the deleterious effects of structural breaks on the quality of economic forecasts.
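The idea of averaging over estimation windows can be illustrated with a deliberately simple sketch. Here the 'model' is just the mean of the most recent observations, which is far cruder than the models used in the paper, and the windows are combined with equal weights. The series, the window lengths and the function names are all hypothetical.

```python
from statistics import fmean


def window_forecast(series, window):
    """Forecast the next value as the mean of the last `window` observations."""
    return fmean(series[-window:])


def average_over_windows(series, windows):
    """Equal-weight combination of forecasts from different estimation windows."""
    return fmean([window_forecast(series, w) for w in windows])


# Hypothetical series with a structural break: the level shifts from 1 to 3.
series = [1.0, 1.0, 1.0, 1.0, 3.0, 3.0]

for w in (2, 4, 6):
    print(f"window {w}: forecast {window_forecast(series, w):.2f}")
print(f"combined: {average_over_windows(series, [2, 4, 6]):.2f}")
```

The short window adapts fully to the new level but would be noisy on real data, while the long window is stable but contaminated by pre-break observations. Averaging hedges between the two without requiring the forecaster to know when the break occurred.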

In the fifth paper Jana Eklund and George Kapetanios provide a non-technical review of various methods of forecasting with large datasets, which includes forecast combination.